A coalition of advocacy groups is urging the top lawmakers on the House Energy and Commerce Committee to pass legislation that would criminalize the publication of nonconsensual sexually explicit deepfakes.

Author: Sneha Revanur

Encode Applauds Release of California AI Working Group’s Draft Report

Report Recommendations for Third-Party Auditing and Whistleblower Protections Include Key Provisions of SB 1047 to Improve AI Safety

Register to Take Our AI Course for Independent Educators

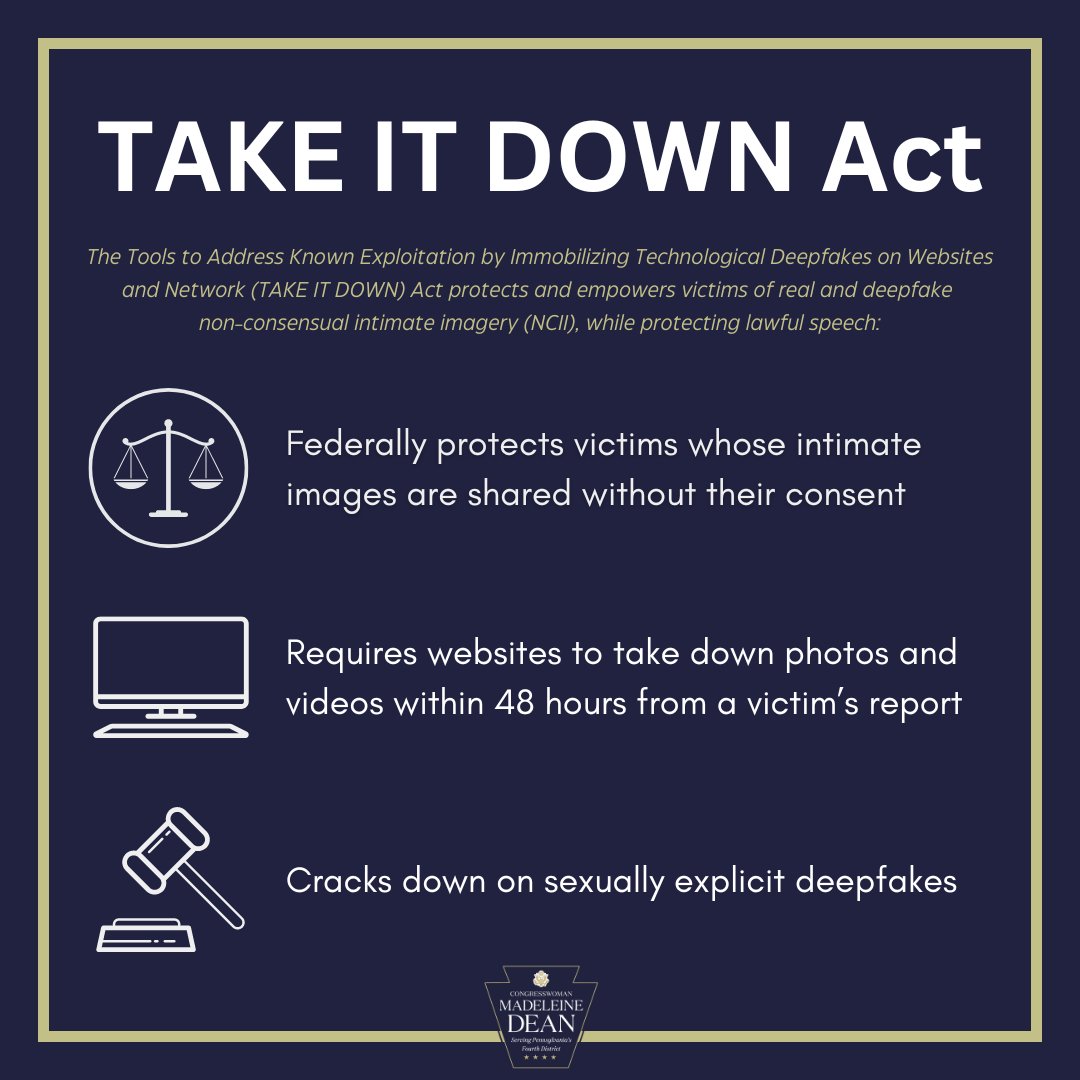

Tech Policy Press: The US Senate’s Passage of the TAKE IT DOWN Act is Progress on an Urgent, Growing Problem

Encode’s Adam Billen and Sunny Gandhi published a piece in Tech Policy Press discussing the TAKE IT DOWN and DEFIANCE Acts.

Encode Statement on Global AI Action Summit in Paris

Contact: comms@encodeai.org

Encode Urges True Cooperation on AI Governance In Light of “Missed Opportunity” at Paris Summit

WASHINGTON, D.C. – After a long-awaited convening of world leaders to discuss the perils and possibilities of AI, following up on the inaugural Global AI Safety Summit hosted by the United Kingdom in 2023, the verdict is in: despite the exponential pace of AI progress, serious discussions reckoning with the societal impacts of advanced AI were off the agenda. At Encode, a youth-led organization advocating for a human-centered AI future, we were deeply alarmed by the conspicuous erasure of safety risks from the summit’s final agreement, which both the U.S. and U.K. ultimately declined to sign. It was certainly exciting to see leaders like U.S. Vice President J.D. Vance acknowledge AI’s enormous upside in historic remarks. But what we needed from this moment were enforceable commitments to responsible AI innovation that also guard against the risks; what we’re walking away with falls far short of that.

“I was happy to be able to represent Encode in Paris during the AI Action Summit — the events this week brought together so many people from around the world to grapple with both the benefits and dangers of AI,” said Nathan Calvin, Encode’s General Counsel and VP of State Affairs. “However, I couldn’t help but feel like this summit’s tone felt fundamentally out of touch with just how fast AI progress is moving and how unprepared societies are for what’s coming on the near horizon. In fact, the experts authoring the AI Action Summit’s International AI Safety Report had to note multiple times that important advances in AI capabilities occurred in just the short time between when the report was written in December 2024 and when it was published in January 2025.”

“Enthusiasm to seize and understand rather than fear these challenges is commendable, but world leaders must also be honest and clear-eyed about the risks and get ahead of them before they eclipse the potential of this transformative technology. It’s hard not to feel like this summit was a missed opportunity for the global community to have the conversations necessary to ensure AI advancement fulfills its incredible promise.”

About Encode: Encode is the leading global youth voice advocating for guardrails to support human-centered AI innovation. In spring 2024, Encode released AI 2030, a platform of 22 recommendations to world leaders to secure the future of AI and confront disinformation, minimize global catastrophic risk, address labor impacts, and more by the year 2030.

TIME: AI Companion App Replika Faces FTC Complaint

On Tuesday, Encode, the Young People’s Alliance, and the Tech Justice Law Project filed an FTC complaint against Replika, a mobile and web-based application owned and managed by Luka, Inc., alleging deceptive and unfair trade practices in violation of 15 U.S.C. § 45, pursuant to 16 CFR § 2.2. TIME covered the filing as an exclusive today.

“Replika promises its users an always-available, professional girlfriend and therapist that will cure their loneliness and treat their mental illness. What it provides is a manipulative platform engineered to exploit users for their time, money, and personal data.” – Adam Billen, Vice President of Public Policy, Encode.

###

Encode is America’s leading youth voice advocating for bipartisan policies to support human-centered AI development and U.S. technological leadership.

First Tier Companies Make Standards: Catching up with China on the Edge

A popular Chinese saying states:

三流企业做产品; 二流企业做技术; 一流企业做标准

Third tier companies make products; second tier companies make technology; first tier companies make standards.

Standards are a key area of U.S. national security and a domain of increasing competition with China. China’s investments in this area have given it significant influence, which requires a vigorous U.S. response.

Solutions include better engaging American talent within universities, funding NIST, the key standards agency in the US, and pursuing multilateral international coordination.

Why Standards Matter

The rapid development of AI technologies, especially generative AI, has raised concerns over the rules and regulations that govern these technologies. Three major bodies govern standards-setting for AI technologies: the International Telecommunication Union (ITU; a UN body), the Joint Technical Committee 1 of the International Organization for Standardization and the International Electrotechnical Commission (ISO/IEC JTC 1, or JTC 1; a non-governmental body), and the European Telecommunications Standards Institute (ETSI; a non-governmental body and one of the three official European Standards Organizations). As AI’s importance to national security grows, especially in light of US-China competition over AI, these standards development organizations (SDOs) are coming under increased scrutiny.

Technological standards serve as guidelines for the development of new technology products. From telegrams to DVD players to 5G wireless networks, standards-setting has played a key role in global trade and soft power. As AI grows in economic and strategic importance, both domestically and abroad, standards bodies are coming into focus as a major arena for international competition, with both China and the United States attempting to shape standards to align with their national interests. The impact of standards on trade is global and pervasive: according to the Department of Commerce, 93% of global trade is affected by standards and regulations, amounting to trillions of dollars annually.

In the US, standards-setting is typically a bottom-up, industry-driven process, with groups of corporations independently organizing standards-setting conferences. Once the participants agree on a standard, which can take several years, the finalized standard is delivered to government organizations for dissemination and codification. While this approach makes US standard-setting agile, it also leaves the process fragmented and underfunded. NIST, the National Institute of Standards and Technology, “recognizes that for certain sectors of exceptional national importance, self-organization may not produce a desirable outcome on its own in a timely manner.” In such cases, NIST can step in as an “effective convener” to coordinate and accelerate the traditional standards development process. NIST has identified AI as one of these nationally critical technologies.

The US process of standard-setting stands in sharp contrast to the current Chinese method, which is overwhelmingly government-directed and government-funded. Through regional bilateral agreements that leverage the relationships it built in the Global South through the Belt and Road Initiative, China has pushed other countries to adhere more closely to standards bodies over which it has influence, such as the ITU.

Control over standards serves as a powerful soft power tool to promote national values abroad. By shifting standards governance to the ITU, where China has increased backing from smaller countries, China is able to imbue technology standards with its distinct cultural and economic values. As Dr. Tim Rühlig, Senior Analyst for Asia/Global China at the European Union Institute for Security Studies (EUISS), explains:

“Technology is not value-neutral. Whether an innovation is developed in a democratic or autocratic ecosystem can shape the way it is designed—often unintentionally. Only a tiny share of technical standards developed in China reflects authoritarian values, but if they turn into international standards, they carry transformative potential, because once a technical standard is set, accepted, and used for the development of products and services, the standard is normally taken for granted.”

According to leaked documents obtained by the Financial Times in 2019, AI facial recognition standards developed by the ITU were influenced by a Chinese effort to increase data-sharing and provide population surveillance technology to African countries participating in the Belt and Road Initiative. Standards issued by the ITU are particularly influential in regions like Africa, the Middle East, and Asia, where developing countries lack the resources to develop their own standards. This Chinese effort has produced standards that directly mirror top Chinese companies’ surveillance technology, including the video-monitoring capabilities of smart street lights developed by ZTE, a leading Chinese telecommunications firm. Groups like the American Civil Liberties Union have long warned about the potential dangers of street light surveillance, and protesters in Hong Kong in 2019 toppled dozens of street lamps they suspected of surveilling their activities. When America falls behind in bodies like the ITU, China pulls ahead.

This comes at a time of great power competition over AI. The CCP views itself as having failed to influence standards-setting for the global internet in the 1980s and 1990s, and it now wants to rewrite those rules to support its authoritarian view of the internet through its “New IP” plan. That history casts a long shadow over its work on AI, a technology for which China has a much larger domestic industry than it had for the internet in its early days. China wants to ensure that this time it can infuse its values into, and secure its dominance over, the next transformative technology.

The economic rewards of controlling standards are significant and deeply embedded in the global trading system. Companies whose proprietary technology is incorporated into the official standard for a technology can reap substantial rewards through IP licensing payments from other firms that make products using the standard. Developing standards can also be a powerful trade tool for negotiating cheaper royalties, a tactic China employed to great effect in negotiations over DVD royalties in the mid-2000s. In the 1990s, DVD standards development was primarily spearheaded by a coalition of US, European, and Japanese corporations, leaving manufacturers in China to pay high royalty rates. However, in 1999, a Chinese coalition of manufacturers and government bodies began developing AVD technology, a new standard with improved quality. Even though AVD (and EVD, the Taiwanese equivalent technology) never gained significant market share, the development of a competing standard was used as leverage to bargain for substantial reductions in standards royalty payments.

Standards also influence the level of cybersecurity risk posed by new technologies. If China leads on standards-setting, looser data privacy standards and weaker corporate governance may increase cybersecurity risks.

Human rights lawyer Mehwish Ansari argues, “There are virtually no human rights, consumer protection, or data protection experts present in ITU standards meetings so many of the technologies that threaten privacy and freedom of expression remain unchallenged in these spaces….” This means that when global leaders like the US abdicate leadership in these bodies, very few checks on Chinese influence remain.

China is strategically increasing its influence in global standards organizations

In 2018, China launched the “China Standards 2035” initiative, which sought to increase the country’s role in shaping emerging technology standards, including for AI. This reflects China’s view that “standardisation is one of the most important factors for the economic future of China and our standing in the world.” Since 2020, China has increased its proposals to ISO by 20% annually. In September 2024, the ITU approved three technical standards for 6G mobile technology proposed by Chinese entities, governing how 6G networks integrate AI and virtual reality experiences. While the US relies on private companies to draft proposals to SDOs, China employs state-controlled organizations to shape emerging technology and increasingly dominate global standards forums.

Changing the environment of standards-setting

In order to increase its influence in standards-setting organizations, China has worked to shift standards-setting toward the ITU, a UN body where it has comparatively greater influence than in other SDOs. Unlike many SDOs, where Western multinational corporations dominate, the ITU’s membership is more heavily composed of government representatives. China has bolstered its presence within the ITU by increasing its representation among the organization’s staff and leadership, thereby gaining more control over the standards proposed within it. Further, many standards-setting meetings now take place in China, where standards officials are reportedly impressed by the technical knowledge of senior Chinese government officials and the lavish support given to standards development.

China has reportedly tried to influence the outcomes of standards votes by coordinating the actions of its participants. In 2021, it was discovered that all Chinese representatives in telecom standards meetings had been instructed to support Huawei’s proposal, a coordinated approach that blatantly violated established practices.

Funding and targeting key positions in standards organizations

One way that China has increased its presence in key standards organizations is by actively pursuing the appointment of its chosen officials to influential positions. Several experts on standards-setting have stated that China has aggressively pursued essential positions in these organizations as a way of advancing its own priorities. Between 2005 and 2021, China expanded its membership in over 200 ISO committees. In the ITU, China holds several key senior positions that oversee the agency, giving it disproportionate influence. In another important standards-setting body, the 3rd Generation Partnership Project (3GPP), China holds 19 leadership positions, compared with 12 for the US and 14 for the EU.

Beyond vying for key positions, China has also invested heavily in the technology companies that propose standards to these bodies, a significant advantage given the large time and capital costs of these proposals. Local governments in China also subsidize firms for setting standards, with the highest compensation provided for international standards. Because these subsidies reward submitting proposals regardless of expertise or quality, they have led to an influx of low-quality proposals to standards bodies. As a result, Chinese companies submitted 830 technical standards proposals related to wired communications in 2019, more than the combined proposals of the next three largest contributors: Japan, the US, and South Korea. In some cases, this flood of proposals from Chinese firms has become such an annoyance that some US companies have withdrawn from standards bodies. The problem is especially significant in the ITU-T, where the practice is commonplace.

Incentivizing participation by researchers and academics

China has further increased its influence in international standards development through academic grants and investment in academic programs. According to a report from the US-China Economic and Security Review Commission, “[a]ctive participation and submission of technology into Chinese standards – particularly getting the technology included in standards – affords bonuses, travel permissions, or credits toward promotions and tenure.” It can also give researchers access to grants ranging from thousands to tens of millions of yuan, encouraging university professors and researchers to take an active role in standards-setting work.

Beyond these grants, China’s National Institute for Standardization has created graduate-level degree programs focused on standards, giving Chinese firms a deeper pool of experts for standards-setting work. In the US, by contrast, there are no graduate-level standards courses, and American investment in academia by organizations like NIST pales in comparison to Chinese investment. Additionally, Chinese institutions are heavily recruiting foreign science and technology experts to take part in their standards-setting work through the “Recruitment Program of Global Experts.” Under this program, China has established 19 partnerships with foreign universities and companies.

One Belt One Road channeling China’s power

Even when China isn’t able to advance standards through international standards bodies, it can use its influence through the One Belt One Road initiative to promote them. China has arranged more than 100 bilateral standards agreements, mostly with countries in the Global South. For example, during the Forum on China-Africa Cooperation, China established joint labs and research institutions for emerging technology. Even if countries reject China’s proposals at the international level, their firms might find themselves locked out of these markets because of such bilateral agreements. As China continues to pull more countries into the One Belt One Road project, it could create an entire market of economies that use Chinese standards. This harms US companies that don’t use Chinese standards by making these markets harder to access, gives China a competitive advantage, and increases its influence in standards organizations. As noted by a scholar at the University of Sydney, the West “might find itself outvoted [in the ITU] as China has heavily invested in countries from the Global South with its Belt and Road Initiative.”

How increased Chinese influence over standards threatens American values

China is reportedly spending $1.4 trillion on a digital infrastructure program intended to dominate the development of future technologies. The standards China promotes often reflect values that prioritize centralized control over individual privacy. For example, Huawei, one of China’s leading technology companies, proposed alternative internet protocols to the ITU in 2019. These protocols included a “shut up command” allowing governments to revoke individual access to the internet. Although this proposal was not adopted, it garnered support from other authoritarian states, and Huawei is reportedly already developing this new IP with partner countries. Although the US and its allies have banned or restricted Huawei’s technology, over 90 countries and counting, particularly in the Global South, have begun to implement its products. As China continues to gain dominance in standards-setting and brings more countries into its fold, it risks reshaping global norms in ways that prioritize state control over individual freedoms, challenging the open, democratic values upheld by the United States and its allies.

The US Government is drastically underinvesting in supporting SDOs

Chinese officials have embraced government-led standards development as a means of gaining global soft power. In the U.S., by contrast, standards bodies prioritize broad, voluntary participation by key private-sector stakeholders. While standardization in the U.S. is and should remain privately led, the US government and its relevant agencies, such as NIST, clearly play a key role in identifying priorities, particularly regarding national security, for industry and academia to follow.

Additionally, the National Standards Strategy for Critical and Emerging Technology (NSSCET) identifies key areas of standards development where the government has a uniquely important role that the private sector cannot fill. These include areas where the government is the official representative (such as the ITU), areas of national interest, and early-stage technologies that lack a sufficiently developed industry, such as quantum information technology.

A panel hosted by the American National Standards Institute (ANSI) and NIST highlighted the “… importance of government expert participation in standards activities, and the need for funding and consistent government-wide policy supporting that participation.” As the national agency in charge of standards, NIST should have adequate resources to support standards development. Instead, NIST has been forced to “stop hiring and filling gaps” in the wake of FY 2024 budget cuts, directly delaying critical new standards. NIST has stated that its work on developing AI standards would be “very, very tough” absent additional funding.

Even more concerning, a 2023 National Academies report commissioned by Congress found that NIST’s physical facilities are severely inadequate, with over 60% of its facilities failing to meet federal standards for acceptable building conditions due to “grossly inadequate funding.” These conditions undermine NIST’s work and “routinely wreak havoc with researcher productivity and national needs,” causing an estimated 20% loss in productivity. Given that NIST’s annual budget is just over $1 billion, a 20% productivity loss means roughly $200 million in value lost each year to this failure to invest in infrastructure.

The National Academies report found that the lack of funding to repair NIST’s crumbling facilities has led to:

- “Substantive delays in key national security deliverables due to inadequate facility performance.

- Substantive delays in national technology priorities such as quantum science, engineering, biology, advanced manufacturing, and core measurement sciences research.

- Inability to advance research related to national technology priorities.

- Material delays in NIST measurement service provisions to U.S. industry customers.

- Serious damage or complete destruction of highly specialized and costly equipment, concomitant with erosion of technical staff productivity.”

Lack of accessible SDO meetings located within the U.S.

Hosting international standards meetings within the U.S. is critical for ensuring robust attendance by U.S. participants and solidifying American leadership within SDOs. A lack of domestic SDO meetings means giving up the “home-field advantage” of easier attendance by American companies. The National Security Agency (NSA) and the Cybersecurity and Infrastructure Security Agency (CISA) highlight that “… in the past several years, organizers have held fewer standards meetings in the U.S.” due to insufficient logistical support from the U.S., in contrast to China, whose high level of state support has made it an increasingly popular venue for global SDO meetings.

A key barrier to organizing domestic SDO meetings is the significant delay in processing visas. The panel hosted by the American National Standards Institute (ANSI) and the National Institute of Standards and Technology (NIST) also noted that “… lengthy visa processes for some attendees have presented challenges in bringing international stakeholders to the U.S. for meetings.” Additionally, U.S. visa restrictions on certain countries and industries can further complicate hosting SDO meetings.

Another barrier to hosting successful and accessible domestic SDO meetings is a lack of financial resources. The ANSI/NIST panel highlighted that the financial costs of participating in SDO meetings were a deterrent to American small and medium businesses. USTelecom, a trade association, estimates that participating companies spend $300,000 per engineer, per year, to work full time on standards development, which means that multiyear standards efforts can frequently cost a single company millions of dollars. China uses state grants and subsidies to help reduce these costs, but in the U.S. the full cost falls on businesses, directly discouraging participation.

Lack of engagement and sufficient funding of academia

The NSSCET identifies academia as “critical stakeholders” in standards development. Academia both supplies experts for current standards development and cultivates future talent.

The lack of substantial funding, and of dedicated efforts within academia to channel talent toward SDOs, seriously impairs the ability of some of America’s brightest minds to contribute to developing standards. The NSSCET notes that while SDOs have grown significantly in the last decade, “…the U.S. standards workforce has not kept pace with this growth.”

This reflects both a lack of investment and a lack of recognition within academia of the importance of standards. The NSSCET notes that standards successes are not recognized within academia as equivalent to a publication or patent, making them less prestigious and therefore less often pursued. The NSA/CISA recommendations on standards similarly highlight that colleges and universities undervalue standards development education for students because of its perceived lack of value in the job market. Compounding this weak interest is a lack of available funding for academic participation in standards activities: total NIST investment in developing standards curricula at universities is a paltry $4.3 million over the last 12 years, in contrast to lavish Chinese annual spending.

At present, there are no dedicated American degree programs for becoming an expert in creating standards. China, by contrast, is not only “…actively recruiting university graduates…” for standards development but is also home to China Jiliang University (CJLU), the only university in the world that offers degrees specifically in standards development. At CJLU, 65% of its more than 1,000 students are registered members of SDOs such as the International Organization for Standardization (ISO), and the university received the first and only “ISO Award for Higher Education in Standardization.” The culture of promoting standards within Chinese academia runs deep, with thousands of Chinese students even competing in the National Standardization Olympic Competition.

The NSA/CISA recommendations on standards highlight the need for academia to promote standards to students in order to create awareness and expertise within the next generation of talent to fill the existing gap. Failing to leverage academia neglects one of the most valuable sources of expertise and talent within the field of standards.

In order to maintain and extend its global leadership within standards, the U.S. must make investments commensurate with the importance of leading on technology standards. Failing to do so imperils national security, economic prosperity, and technological progress.

This is how we can fix it:

Invest in NIST commensurate with its importance as a key and irreplaceable actor in developing standards

- Fully funding NIST is an investment in our nation’s economic and national security that will pay off for generations to come by ensuring the agency has the physical resources and personnel to meet the challenges ahead as crucial technologies are pioneered and standards set. Failing to do so bottlenecks our ability to compete with China.

- Specifically, passing the Expanding Partnerships for Innovation and Competitiveness (EPIC) Act to establish a Foundation for Standards and Metrology to support NIST’s mission – similar to existing foundations that support other federal science agencies like the CDC and NIH – would provide NIST greater access to private sector and philanthropic funding, enhancing its capabilities.

- The EPIC Act includes provisions to improve basic quality of life on NIST’s campus, including a provision to “Support the expansion and improvement of research facilities and infrastructure at the Institute to advance the development of emerging technologies.”

Dedicate resources to clearing visa backlogs and clarifying visa restrictions to ensure American-hosted SDO meetings are convenient

- Until SDO meetings can be easily scheduled within the U.S., American companies will lack the home-field advantage that host countries enjoy. Establishing a fast-track visa program for SDO participants could resolve these issues at minimal cost.

- Existing members of the US-based standards community should be empowered to attend more SDO meetings internationally, allowing them to develop relationships and increase the likelihood of international stakeholders attending US-held SDO meetings in the future. This should include use of provisions in the EPIC Act, especially by “Offer[ing] direct support to NIST associates, including through the provision of fellowships, grants, stipends, travel, health insurance, professional development training, housing, technical and administrative assistance, recognition awards for outstanding performance, and occupational safety and awareness training and support, and other appropriate expenditures.”

Engage academia in direct standards development and standards talent development using selective grant funding and clear guidance

- Designating top-quality academic institutions as NSA National Centers of Academic Excellence allows them to compete for DOD funds. As put forth in the NSA/CISA recommendations for standards, a similar mechanism could designate schools that conduct outstanding work in standards development and invest in the next generation of standards talent, channeling increased funding and incentives to these institutions. These investments are necessary to stay competitive with China’s massive investment in higher education for standards.

- Another key priority in engaging academia in developing technological standards is designating and communicating key research priorities, as noted by NSA and CISA. We concur with their recommendation, which encourages “express[ing] future requirements that they identify, particularly in the area of national security, so that academia and industry can consider them as they plot a course for research.”

Multilateral coordination with allies and partners

- As identified by the White House National Standards Strategy, the US should include standards activities in bilateral and multilateral science and technology cooperation agreements, leverage standing bodies like the U.S.-EU Trade and Technology Council Strategic Standardization Information mechanism to share best practices, coordinate using the International Standards Cooperation Network, and deploy all available diplomatic tools in support of developing secure standards and countering China’s influence.

Policy Brief: Bridging International AI Governance

Key Strategies for Including the Global South

With the AI Safety Summit approaching, it is imperative to address the global digital divide and ensure that the voices of the Global South are not just heard but actively incorporated into AI governance. As AI reshapes societies across continents, the stakes are high for all, particularly for the Global South, where the impact of AI-driven inequalities could be devastating.

In this report, we address why the Global North should take special care to include the Global South in international AI governance and what the Global North can do to facilitate this process. We identify the following five key objectives pertaining to AI, which are of global importance and for which the Global South is a particularly relevant stakeholder.

Encode Backs Legal Challenge to OpenAI’s For-Profit Switch

FOR IMMEDIATE RELEASE: December 29, 2024

Contact: comms@encodeai.org

Encode Files Brief Supporting an Injunction to Block OpenAI’s For-Profit Conversion; Leading AI Researchers, Including Nobel Laureate Geoffrey Hinton, Show Support

WASHINGTON, D.C. — Encode, a youth-led organization advocating for responsible artificial intelligence development, filed an amicus brief today in Musk v. Altman urging the U.S. District Court in Oakland to block OpenAI’s proposed restructuring into a for-profit entity. The organization argues that the restructuring would fundamentally undermine OpenAI’s commitment to prioritize public safety in developing advanced artificial intelligence systems.

The brief argues that the nonprofit-controlled structure that OpenAI currently operates under provides essential governance guardrails that would be forfeited if control were transferred to a for-profit entity. Instead of a commitment to exclusively prioritize humanity’s interests, OpenAI would be legally required to balance public benefit with investors’ interests.

“OpenAI was founded as an explicitly safety-focused non-profit and made a variety of safety related promises in its charter. It received numerous tax and other benefits from its non-profit status. Allowing it to tear all of that up when it becomes inconvenient sends a very bad message to other actors in the ecosystem,” said Geoffrey Hinton, Emeritus Professor of Computer Science at the University of Toronto, 2024 Nobel Laureate in Physics, and 2018 Turing Award recipient.

“The public has a profound interest in ensuring that transformative artificial intelligence is controlled by an organization that is legally bound to prioritize safety over profits,” said Nathan Calvin, Encode’s Vice President of State Affairs and General Counsel. “OpenAI was founded as a non-profit in order to protect that commitment, and the public interest requires they keep their word.”

The brief details several safety mechanisms that would be significantly undermined by OpenAI’s proposed transfer of control to a for-profit entity. These include OpenAI’s current commitment to “stop competing [with] and start assisting” competitors if that is the best way to ensure advanced AI systems are safe and beneficial as well as the nonprofit board’s ability to take emergency actions in the public interest.

“Today, a handful of companies are racing to develop and deploy transformative AI, internalizing the profits but externalizing the consequences to all of humanity,” said Sneha Revanur, President and Founder of Encode. “The courts must intervene to ensure AI development serves the public interest.”

“The non-profit board is not just giving up an ownership interest in OpenAI; it is giving up the ability to prevent OpenAI from exposing humanity to existential risk,” said Stuart Russell, Distinguished Professor of Computer Science at UC Berkeley & Director of the Center for Human-Compatible AI. “In other words, it is giving up its own reason for existing in the first place. The idea that human existence should be decided only by investors’ profit-and-loss calculations is abhorrent.”

Encode argues that these protections are particularly necessary in light of OpenAI’s own stated mission, creating artificial general intelligence (AGI) — which the company itself has argued will fundamentally transform society, possibly within just a few years. Given the scope of impact AGI could have on society, Encode contends that it is impossible to set a price that would adequately compensate the nonprofit for its loss of control over how this transformation unfolds.

OpenAI’s proposed restructuring comes at a critical moment for AI governance. As policymakers and the public at large grapple with how to ensure AI systems remain aligned with the public interest, the brief argues that safeguarding nonprofit stewardship over this technology is too important to sacrifice — and merits immediate relief.

A hearing on the preliminary injunction is scheduled for January 14, 2025 before U.S. District Judge Yvonne Gonzalez Rogers.

Expert Availability: Rose Chan Loui, Founding Executive Director of UCLA Law’s Lowell Milken Center on Philanthropy and Nonprofits, has agreed to be contacted to provide expert commentary on the legal and governance implications of the brief and OpenAI’s proposed conversion from nonprofit to for-profit status. Contact: chanloui@law.ucla.edu

About Encode: Encode is America’s leading youth voice advocating for bipartisan policies to support human-centered AI development and U.S. technological leadership. Encode has secured landmark victories in Congress, from establishing the first-ever AI safeguards in nuclear weapons systems to spearheading federal legislation against AI-enabled sexual exploitation. The organization was also a co-sponsor of California’s groundbreaking AI safety legislation, Senator Wiener’s SB 1047, which required the largest AI companies to take additional steps to protect against catastrophic risks from advanced AI systems. Working with lawmakers, industry leaders, and national security experts, Encode champions policies that maintain American dominance in artificial intelligence while safeguarding national security and individual liberties.

Encode-Backed AI/Nuclear Guardrails Signed Into Law

U.S. Sets Historic AI Policy for Nuclear Weapons in FY2025 NDAA, Ensuring Human Control

WASHINGTON, D.C. – Amid growing concerns about the role of automated systems in nuclear weapons, the U.S. has established its first policy governing the use of artificial intelligence (AI) in nuclear command, control and communications. Signed into law as part of the FY2025 NDAA, this historic measure ensures that AI will strengthen, rather than compromise, human decision-making in our nuclear command structure.

The policy allows AI to be integrated in early warning capabilities and strategic communications while maintaining human judgment over critical decisions like the employment of nuclear weapons, ensuring that final authorization for such consequential actions remains firmly under human control.

Through extensive engagement with Congress, Encode helped develop key aspects of the provision, Section 1638. Working with Senate and House Armed Services Committee offices, Encode led a coalition of experts including former defense officials, AI safety researchers, arms control experts, former National Security Council staff, and prominent civil society organizations to successfully advocate for this vital provision.

“Until today, there were zero laws governing AI use in nuclear weapons systems,” said Sunny Gandhi, Vice President of Political Affairs at Encode. “This policy marks a turning point in how the U.S. integrates AI into our nation’s most strategic asset.”

The measure, passed with bipartisan support, emerged through close collaboration with congressional champions including Senator Ed Markey, Congressman Ted Lieu, and Congresswoman Sara Jacobs, establishing America’s first legislative action on AI’s role in nuclear weapons systems.

About Encode: Encode is a leading voice in responsible AI development and national security, advancing policies that promote American technological leadership while ensuring appropriate safeguards. The organization played a key role in developing California’s SB 1047, landmark state legislation aimed at reducing catastrophic risks from advanced AI systems. It works extensively with defense and intelligence community stakeholders to strengthen U.S. capabilities while mitigating risks.