Encode celebrates key victory following months of advocacy for bipartisan bill to combat online intimate image abuse.

Author: Sneha Revanur

School District Brief: Safeguards to Prevent Deepfake Sexual Abuse in Schools

Introduction / State of the Problem

Technologists and academics have been warning the public for years about the proliferation of non-consensual sexual deepfakes, altered or artificial non-consensual pornography of real people. Today, a potential abuser needs only a web browser and an internet connection to freely create hundreds or thousands of non-consensual intimate images. 96% of deepfake videos online are non-consensual pornographic videos, and 99% of them target women. Alarmingly, we are now seeing a pattern of boys as young as 13 using these tools to target their female classmates with deepfake sexual abuse.

On October 20, 2023, young female students at Westfield High School in New Jersey discovered that teenage boys at the school had taken fully-clothed photos of them and used an AI app to alter them into sexually explicit, fabricated photos for public circulation. One of the female victims revealed that it was not just one male student, but a group using “upwards of a dozen girls’ images to make AI pornography.” In the same month, halfway across the country at Aledo High School in Texas, a teenage boy generated nude images of ten female classmates. The victims, who sought help from the school, the sheriff’s office, and the social media apps, struggled to stop the photos from spreading for over eight months: “at that point, they didn’t know how far it spread”. At Issaquah High School in Washington, another teenage boy circulated deepfake nude images of “at least six 14-to-15-year-old female classmates and allegedly a school official” on Snapchat, a popular image-based social media app. While school staff knew about the images, the police only heard about the incident through parents who independently reached out to file sex offense reports. Four months later, a similar incident occurred in Beverly Hills, California, and the sixteen victims were only in middle school.

Consequences of Inaction / Lack of Appropriate Guidelines

In almost every case of deepfake sexual abuse in schools, administrators and district officials were caught off guard and unprepared, even when relevant guidelines existed. At Westfield High School, school administrators conducted an initial investigation with the alleged perpetrators and police present, without their parents and lawyers, making all collected evidence inadmissible in court. Because of the school’s negligence, the victims could not seek accountability and still do not know “the exact identities or the number of people who created the images, how many were made, or if they still exist”. At Issaquah High School, when a police detective inquired about why the school had not reported the incident, school officials questioned why they would be required to report “fake images”. Issaquah’s Child Abuse, Neglect, and Exploitation Prevention Procedure states that in cases of sexual abuse, reports to law enforcement or Child Protective Services must be made “at the first opportunity, but in no case longer than forty-eight hours”. Yet, because fake images are not directly named in the policy, the school did not file a report until six days later, and not without multiple reminders from the police about the school’s duty as a mandatory reporter. In Beverly Hills, California, administrators acted more swiftly in expelling the five students responsible. Still, the perpetrators retained full anonymity, while the images of victims were permanently made public, attaching their faces to a nude body. Victims shared struggles with feelings of anxiety, shame, isolation, an inability to focus at school, and serious concerns about reputational damage, future repercussions with job prospects, and the possibility that photos could resurface at any point.

A Path Forward

Deepfake sexual abuse is not inevitable: it is possible and necessary for schools to implement concrete preventative and reactive measures. Even before an incident has occurred, schools can protect students by setting standards for acceptable and unacceptable behavior, educating staff, and modifying existing policies to account for such incidents.

Many schools have existing procedures related to sexual harassment and cyberbullying issues. However, standard practices to handle digital sexual abuse via deepfakes have yet to materialize. Existing procedures non-specific to this area have been ineffective, resulting in the exposure of victims’ identities, week-long delays while pornographic images are circulated amongst peers, and failures to report incidents to law enforcement in a timely manner. School action plans to address these risks should incorporate the following considerations:

- Deepfake sexual abuse incidents in schools follow a similar pattern: students feed fully-clothed images of their peers into an AI application to manipulate them into sexually explicit images and circulate them through social media platforms like Snapchat. The apps used to create and distribute deepfake sexual images are easily accessible to most students, who recklessly disregard the grave consequences of their actions. Schools must update their codes of conduct and their policies on sexual harassment and abuse; harassment, intimidation and abuse; cyberbullying; and AI to clearly ban the creation and dissemination of deepfake sexual imagery. Those updated policies should be clearly communicated through school-wide events and announcements, orientation, and consent or sexual education curricula. Schools must clearly communicate to students the seriousness of the issue and the severity of the consequences, setting a clear precedent for action before crises occur.

- Appropriate consequences for perpetrators: The lack of appropriate consequences for the creation and dissemination of deepfake sexual imagery will undermine efforts to deter such behavior. Across recent incidents, most schools failed to identify all perpetrators involved or to deliver reasonable consequences for the serious harm their actions caused. Westfield High School suspended a male student accused of fabricating the images for one or two days; victims and families shared that the perpetrators at Aledo High School received “probation, a slap on the wrist… [that will] be expunged. But these pictures could forever be out there of our girls.” To deter perpetrators and protect victims, schools should establish guidelines for determining consequences, and should specify which stakeholders take part in deciding those consequences and which parties carry them out. Even in cases where the school needs to involve local authorities, there should be school-specific consequences such as suspension or expulsion.

- Equivalence of real images and deepfake generated images: Issaquah failed to address its deepfake sexual abuse incident because school administrators were unsure whether existing sexual abuse policies applied to generated images. Procedures addressing sexual abuse incidents must be updated to treat the creation and distribution of non-consensual sexual deepfake images the same as real images. For example, an incident that involves creating deepfake porn should be treated with the same seriousness as an incident that involves non-consensually photographing someone nude in a locker room. Deepfake sexual abuse incidents require the same rigorous investigative and reporting process as other sexual abuse incidents because their consequences are similarly harmful to victims and the larger school community.

- Standard procedures to reduce harms experienced by victims: At Westfield High School, victims discovered their photos were used to generate deepfake pornography after their names were announced over the school-wide intercom. Not only did victims feel that it was a violation of privacy to have their identities exposed to the entire student body, but the boys who generated the images were privately pulled aside for investigation. Schools should have established, written procedures to discreetly inform relevant authorities about incidents and to support victims at the start of an investigation on deepfake sexual abuse. After procedures are established, educators should be made aware of relevant school procedures for protecting victims through dedicated training.

Case Study: Seattle Public School District

The incidents that have sounded the alarm bells on this issue are only the ones that have been reported in large news outlets. Such incidents are likely occurring all over the country without much media attention. The action we’re seeing today is largely the result of a few young, brave advocates using their own experiences as a platform to give voice to this issue – and it is time that we listen. Seattle Public Schools, like most districts around the country, has not yet had a high profile incident. A review of its code of conduct, sexual harassment policy, and cyber bullying policy, similar to those of many other schools, reveals a lack of preparedness in preventing and responding to potential deepfake sexual abuse within schools. Below is a case study of how the aforementioned considerations may apply to bolster Seattle’s district policies:

- Code of conduct: Seattle Public School District’s code of conduct, revised and re-approved every year by the Board of Education, contains policy on acceptable student behavior and standard disciplinary procedures. Conduct that is “substantially interfering with a student’s education […] determined by considering a targeted student’s grades, attendance, demeanor, interaction with peers, interest and participation in activities, and other indicators” merits a “disciplinary response.” Furthermore, “substantial disruption includes but is not limited to: significant interference with instruction, school operations or school activities… or a hostile environment that significantly interferes with a student’s education.” Deepfake sexual abuse incidents fall squarely under this conduct, affecting a victim’s ability to focus and interact with teachers and peers. Generating and electronically distributing pornographic images of fellow students outside of school hours or off-campus falls within the school’s purview under its off-campus student behavior policy, as it causes a substantial disruption to on-campus activities and interferes with the right of students to safely receive their education. Furthermore, past instances have shown that these incidents spread rapidly and become a topic of conversation that continues into the school day, especially when handled without sensitivity for victims, creating a hostile environment for students.

- Sexual harassment policy: Existing policy states that sexual harassment includes “unwelcome sexual or gender-directed conduct or communication that creates an intimidating, hostile, or offensive environment or interferes with an individual’s educational performance”. Deepfake pornography, which has been non-consensual and directed toward young girls in every high profile case thus far, should be considered a form of “conduct or communication” that is prohibited under this policy. The Superintendent has a duty to “develop procedures to provide age-appropriate information to district staff, students, parents, and volunteers regarding this policy… [which] include a plan for implementing programs and trainings designed to enhance the recognition and prevention of sexual harassment.” Such policies should reflect the most recent Title IX regulations, effective August 1st, which state that “non-consensual distribution of intimate images including authentic images and images that have been altered or generated by artificial intelligence (AI) technologies” are considered a form of online sexual harassment. Revisions made to sexual harassment policy should be made clear to all school staff through dedicated training.

- Cyber bullying policy: Deepfake sexual abuse is also a clear case of cyberbullying. As defined by the Seattle Public Schools, “harassment, intimidation, or bullying may take many forms including, but not limited to, slurs, rumors, jokes, innuendoes, demeaning comments, drawings, cartoons… or other written, oral, physical or electronically transmitted messages or images directed toward a student.” Furthermore, the act is specified as one that “has the effect of substantially interfering with a student’s education”, “creates an intimidating or threatening educational environment”, and/or “has the effect of substantially disrupting the orderly operation of school”. From what victims have shared about their anxiety, inability to focus in school, and newfound mistrust toward those around them, it is evident that deepfake sexual abuse constitutes cyberbullying – at a minimum. However, because the proliferation of generated pornography is so recent, school administrators may be uncertain how existing policy applies to such incidents. Therefore, this policy should be revised to directly address generated visual content. For instance, “electronically transmitted messages or images directed toward a student” may be revised to “electronically generated or transmitted messages or images directed toward or depicting a student”.

Conclusion

Deepfake pornography can be created in seconds, yet follows victims for the rest of their lives. Perpetrators today are emboldened by free and rapid access to deepfake technology and school environments that fail to hold them accountable. School districts’ inaction has resulted in the proliferation of deepfake sexual abuse incidents nationwide, leaving countless victims with little recourse and irreversible trauma. It is critical for schools to take immediate action to protect students, especially young girls, by incorporating safeguards within school policies: addressing the equivalence of generated and real images within their codes of conduct, sexual harassment policies, and cyber bullying policies, setting guidelines to protect victims and determine consequences for perpetrators, and ensuring all staff are aware of these changes. By taking these steps, schools can create a safer environment, ensuring that students receive the protection and justice they deserve, and deterring future incidents of deepfake sexual abuse.

Encode’s VP of Policy Publishes Seattle Times Op-Ed

Encode’s Adam Billen publishes op-ed addressing steps schools and lawmakers can take to combat deepfake nudes in schools in the Seattle Times.

Encode & ARI Coalition Letter: The DEFIANCE and TAKE IT DOWN Acts

FOR IMMEDIATE RELEASE: Dec 5, 2024

Contact: adam@encodeai.org

Tech Policy Leaders Launch Major Push for AI Deepfake Legislation

Major Initiative Unites Child Safety Advocates, Tech Experts Behind Senate-Passed Bills

WASHINGTON, D.C. – Encode and Americans for Responsible Innovation (ARI) today led a coalition of over 30 organizations calling on House leadership to advance crucial legislation addressing non-consensual AI-generated deepfakes. As first reported by Axios, the joint letter urges immediate passage of two bipartisan bills: the DEFIANCE Act and TAKE IT DOWN Act, both of which have cleared the Senate with strong support.

“This unprecedented coalition demonstrates the urgency of addressing deepfake nudes before they become an unstoppable crisis,” said Encode VP of Public Policy Adam Billen. “AI-generated nudes are flooding our schools and communities, robbing our children of the safe upbringing they deserve. The DEFIANCE and TAKE IT DOWN Acts are a rare, bipartisan opportunity for Congress to get ahead of a technological challenge before it’s too late.”

The coalition spans leading victim support organizations such as the Sexual Violence Prevention Association, RAINN, and Raven, major technology policy organizations like the Software Information and Industry Association and the Center for AI and Digital Policy, and prominent advocacy groups including the American Principles Project, Common Sense Media and Public Citizen.

The legislation targets a growing digital threat: AI-generated non-consensual intimate imagery. Under the DEFIANCE Act, survivors gain the right to pursue civil action against perpetrators, while the TAKE IT DOWN Act introduces criminal consequences and mandates platform accountability through required content removal systems. Following the DEFIANCE Act’s Senate passage this summer, the TAKE IT DOWN Act secured Senate approval in recent days.

The joint campaign – coordinated by Encode and ARI – marks an unprecedented alignment between children’s safety advocates, anti-exploitation experts, and technology policy specialists. Building on this momentum, both organizations unveiled StopAIFakes.com Wednesday, launching a grassroots petition drive to demonstrate public demand for legislative action.

About Encode: Encode is a youth-led organization advocating for safe and responsible artificial intelligence.

Media Contact:

Adam Billen

VP, Political Affairs

Contact: comms@encodeai.org

Petition Urging House to Stop Non-Consensual Deepfakes

FOR IMMEDIATE RELEASE: December 4, 2024

Contact: comms@encodeai.org

Petitions support the DEFIANCE Act and TAKE IT DOWN Act

WASHINGTON, D.C. – On Wednesday, Americans for Responsible Innovation and Encode announced a new petition campaign, urging the House of Representatives to pass protections against AI-generated non-consensual intimate images (NCII) and revenge porn before the end of the year. The campaign, which is expected to gather thousands of signatures over the course of the next week, supports passage of the TAKE IT DOWN Act and the DEFIANCE Act. Petitions are being gathered at StopAIFakes.com.

The TAKE IT DOWN Act, introduced by Sens. Ted Cruz (R-TX) and Amy Klobuchar (D-MN), criminalizes the publication of non-consensual, sexually exploitative images — including AI-generated deepfakes — and requires online platforms to have in place notice and takedown processes. The DEFIANCE Act was introduced by Sens. Dick Durbin (D-IL) and Lindsey Graham (R-SC) in the Senate and Rep. Alexandria Ocasio-Cortez (D-NY) in the House. The bill empowers survivors of AI NCII — including minors and their families — to take legal action by suing their perpetrators. Both bills have passed the Senate.

“We can’t let Congress miss the window for action on AI deepfakes like they missed the boat on social media,” said ARI President Brad Carson. “Children are being exploited and harassed by AI deepfakes, and that causes a lifetime of harm. The DEFIANCE Act and the TAKE IT DOWN Act are two easy, bipartisan solutions that Congress can get across the finish line this year. Lawmakers can’t be allowed to sit on the sidelines while kids are getting hurt.”

“Deepfake porn is becoming a pervasive part of our schools and communities, robbing our children of the safe upbringing they deserve,” said Encode Vice President of Public Policy Adam Billen. “We owe them a safe childhood free from fear and exploitation. The TAKE IT DOWN and DEFIANCE Acts are Congress’ chance to create that future.”

###

About Encode Justice: Encode is the world’s first and largest youth movement for safe and responsible artificial intelligence. Powered by 1,300 young people across every inhabited continent, Encode Justice fights to steer AI development in a direction that benefits society.

Coalition Letter: The DEFIANCE Act

Encode publishes open letter in support of the DEFIANCE Act with support from industry, civil society, and the education space.

Encode Hosts Capitol Hill Summit on Ending Deepfake Porn

Event featuring deepfake victim Francesca Mani and Sens. Cruz, Klobuchar, and Durbin

Newsom vetoes landmark AI safety bill backed by Californians

Full Article: The Guardian

Governor Gavin Newsom of California recently killed SB1047, a first-of-its-kind artificial intelligence safety bill, arguing that its focus on only the largest AI models leaves out smaller ones that can also be risky. Instead, he says, we should pass comprehensive regulations on the technology.

If this doesn’t sound quite right to you, you’re not alone.

Despite claims by prominent opponents of the bill that “literally no one wants this”, SB1047 was popular – really popular. It passed the California legislature with an average of two-thirds of each chamber voting in favor. Six statewide polls that presented pro and con arguments for the bill show strong majorities in support, which rose over time. A September national poll found 80% of Americans thought Newsom should sign the bill. It was also endorsed by the two most-cited AI researchers alive, along with more than 110 current and former staff of the top-five AI companies.

The core of SB1047 would have established liability for creators of AI models in the event they cause a catastrophe and the developer didn’t take appropriate safety measures.

These provisions received support from at least 80% of California voters in an August poll.

So how do we make sense of this divide?

The aforementioned surveys were all commissioned or conducted by SB1047-sympathetic groups, prompting opponents to dismiss them as biased.

But even when a bill-sympathetic polling shop collaborated with an opponent to test “con” arguments in September, 62% of Californians were in favor.

Moreover, these results don’t surprise me at all. I’m writing a book on the economics and politics of AI and have analyzed years of nationwide polling on the topic. The findings are pretty consistent: people worry about risks from AI, favor regulations, and don’t trust companies to police themselves. Incredibly, these findings tend to hold true for both Republicans and Democrats.

So why would Newsom buck the popular bill?

Well, the bill was fiercely resisted by most of the AI industry, including Google, Meta and OpenAI. The US has let the industry self-regulate, and these companies desperately don’t want that to change – whatever sounds their leaders make to the contrary.

AI investors such as the venture fund Andreessen Horowitz, also known as a16z, mounted a smear campaign against the bill, saying anything they thought would kill the bill and hiring lobbyists with close ties to Newsom.

AI “godmother” and Stanford professor Fei-Fei Li parroted Andreessen Horowitz’s misleading talking points about the bill in the pages of Fortune – never disclosing that she runs a billion-dollar AI startup backed by the firm.

Then, eight congressional Democrats from California asked Newsom for a veto in an open letter, which was first published by an Andreessen Horowitz partner.

The top three names on the congressional letter – Zoe Lofgren, Anna Eshoo, and Ro Khanna – have collectively taken more than $4m in political contributions from the industry, accounting for nearly half of their lifetime top-20 contributors. Google was their biggest donor by far, with nearly $1m in total.

The death knell probably came from the former House speaker Nancy Pelosi, who published her own statement against the bill, citing the congressional letter and Li’s Fortune op-ed.

In 2021, reporters discovered that Lofgren’s daughter is a lawyer for Google, which prompted a watchdog to ask Pelosi to negotiate her recusal from antitrust oversight roles.

Who came to Lofgren’s defense? Eshoo and Khanna.

Three years later, Lofgren remains in these roles, which have helped her block efforts to rein in big tech – against the will of even her Silicon Valley constituents.

Pelosi’s 2023 financial disclosure shows that her husband owned between $16m and $80m in stocks and options in Amazon, Google, Microsoft and Nvidia.

When I asked if these investments pose a conflict of interest, Pelosi’s spokesperson replied: “Speaker Pelosi does not own any stocks, and she has no prior knowledge or subsequent involvement in any transactions.”

SB1047’s primary author, California state senator Scott Wiener, is widely expected to run for Pelosi’s congressional seat upon her retirement. His likely opponent? Christine Pelosi, the former speaker’s daughter, fueling speculation that Pelosi may be trying to clear the field.

In Silicon Valley, AI is the hot thing and a perceived ticket to fortune and power. In Congress, AI is something to regulate … later, so as to not upset one of the wealthiest industries in the country.

But the reality on the ground is that AI is more a source of fear and resentment. California’s state legislators, who are more down-to-earth than high-flying national Democrats, appear to be genuinely reflecting – or even moderating – the will of their constituents.

Sunny Gandhi of the youth tech advocacy group Encode, which co-sponsored the bill, told me: “When you tell the average person that tech giants are creating the most powerful tools in human history but resist simple measures to prevent catastrophic harm, their reaction isn’t just disbelief – it’s outrage. This isn’t just a policy disagreement; it’s a moral chasm between Silicon Valley and Main Street.”

Newsom just told us which of these he values more.

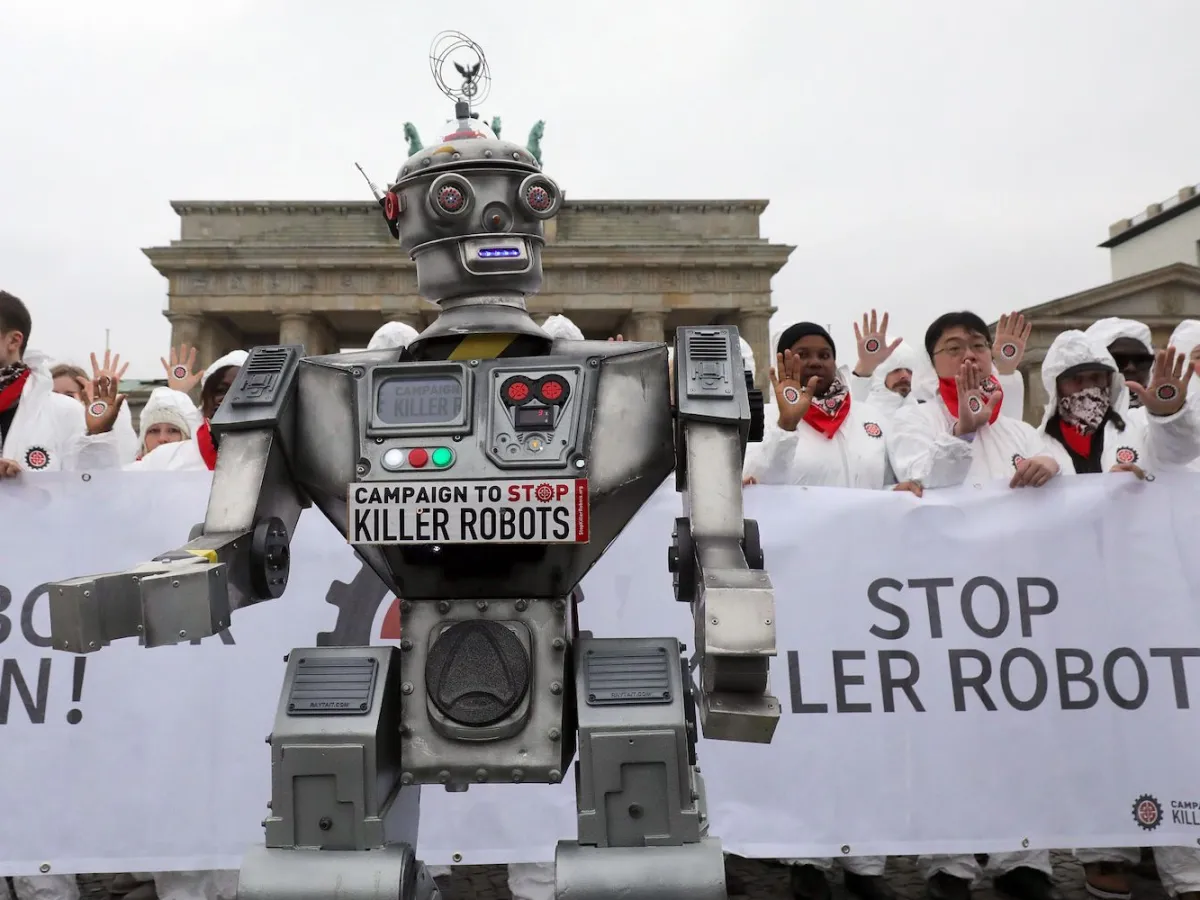

Policy Brief: The Technical Risks of Lethal Autonomous Weapons Systems

Authored by Alycia Colijn (Encode Justice Netherlands) and Heramb Podar (Encode Justice India).